Before ChatGPT, There Was ELIZA: Watch the 1960s Chatbot in Action

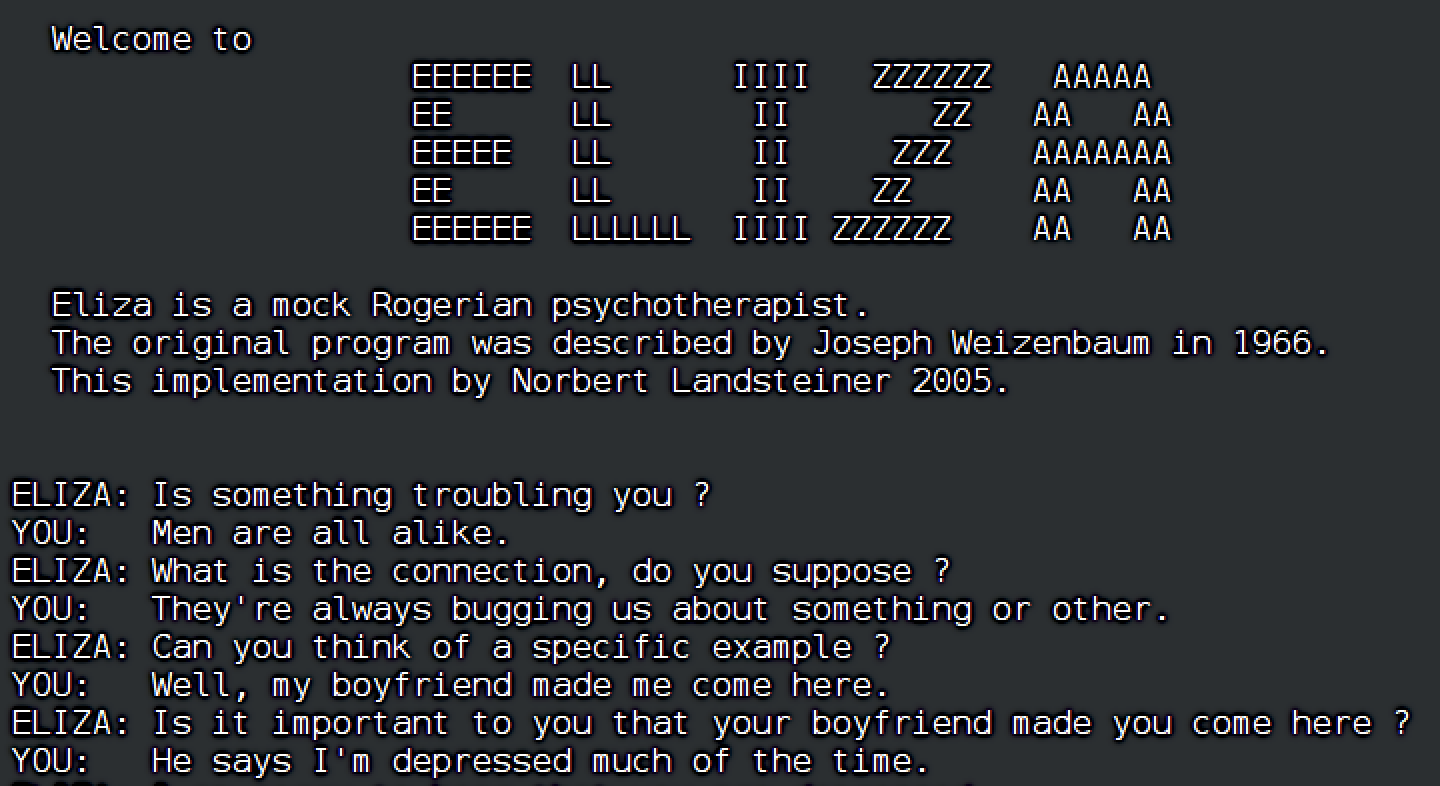

In 1966, the sociologist and critic Philip Rieff published The Triumph of the Therapeutic, which diagnosed how thoroughly the culture of psychotherapy had come to influence ways of life and thought in the modern West. That same year, in the journal Communications of the Association for Computing Machinery, the computer scientist Joseph Weizenbaum published “ELIZA — A Computer Program For the Study of Natural Language Communication Between Man and Machine.” Could it be a coincidence that the program Weizenbaum explained in that paper — the earliest “chatbot,” as we would now call it — is best known for responding to its user’s input in the nonjudgmental manner of a therapist?

ELIZA was still drawing interest in the nineteen-eighties, as evidenced by the television clip above. “The computer’s replies seem very understanding,” says its narrator, “but this program is merely triggered by certain phrases to come out with stock responses.” Yet even though its users knew full well that “ELIZA didn’t understand a single word that was being typed into it,” that didn’t stop some of their interactions with it from becoming emotionally charged. Weizenbaum’s program thus passes a kind of “Turing test,” which was first proposed by pioneering computer scientist Alan Turing to determine whether a computer can generate output indistinguishable from communication with a human being.
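To make the narrator's description concrete, here is a minimal sketch of a program that is "merely triggered by certain phrases to come out with stock responses." It is an illustration only, not Weizenbaum's original script: the rules, the fallback line, the pronoun table, and the function names `reflect` and `respond` are all hypothetical choices made for this example.

```python
import re

# Hypothetical trigger phrases and stock responses, invented for this sketch.
# Each rule pairs a pattern with a reply template; {0} is filled with the
# captured fragment of the user's own input.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Stock reply used when no trigger phrase is found.
FALLBACK = "Please go on."

# Assumed pronoun swaps so the echoed fragment reads from the program's side.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment):
    """Swap first- and second-person words in a lowercased fragment."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(text):
    """Return the stock response for the first rule whose phrase appears."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


print(respond("I need my coffee."))    # Why do you need your coffee?
print(respond("The weather is nice"))  # Please go on.
```

Nothing here models meaning. The reply is assembled entirely from pattern matches and fragments of the user's own sentence, which is why such output can seem attentive without the program understanding a word.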

In fact, 60 years after Weizenbaum first began developing it, ELIZA, which you can still try online, seems to be holding its own in that arena. “In a preprint research paper titled ‘Does GPT‑4 Pass the Turing Test?,’ two researchers from UC San Diego pitted OpenAI’s GPT‑4 AI language model against human participants, GPT‑3.5, and ELIZA to see which could trick participants into thinking it was human with the greatest success,” reports Ars Technica’s Benj Edwards. This study found that “human participants correctly identified other humans in only 63 percent of the interactions,” and that ELIZA, with its tricks of reflecting users’ input back at them, “surpassed the AI model that powers the free version of ChatGPT.”

This isn’t to imply that ChatGPT’s users might as well go back to Weizenbaum’s simple novelty program. Still, we’d surely do well to revisit his subsequent thinking on the subject of artificial intelligence. Later in his career, writes Ben Tarnoff in the Guardian, Weizenbaum published “articles and books that condemned the worldview of his colleagues and warned of the dangers posed by their work. Artificial intelligence, he came to believe, was an ‘index of the insanity of our world.’ ” Even in 1967, he was arguing that “no computer could ever fully understand a human being. Then he went one step further: no human being could ever fully understand another human being” — a proposition arguably supported by nearly a century and a half of psychotherapy.

Based in Seoul, Colin Marshall writes and broadcasts on cities, language, and culture. His projects include the Substack newsletter Books on Cities, the book The Stateless City: a Walk through 21st-Century Los Angeles, and the video series The City in Cinema.